Headline Article at Nature Featuring our Citation Manipulation Work

The citation black market: schemes selling fake references alarm scientists

Our work on citation manipulation was recently covered by Nature. In this work, the research team went undercover and contacted a "citation boosting" service. The team managed to buy citations that appeared in a Scopus-indexed journal, providing conclusive evidence that citations can be bought in bulk.

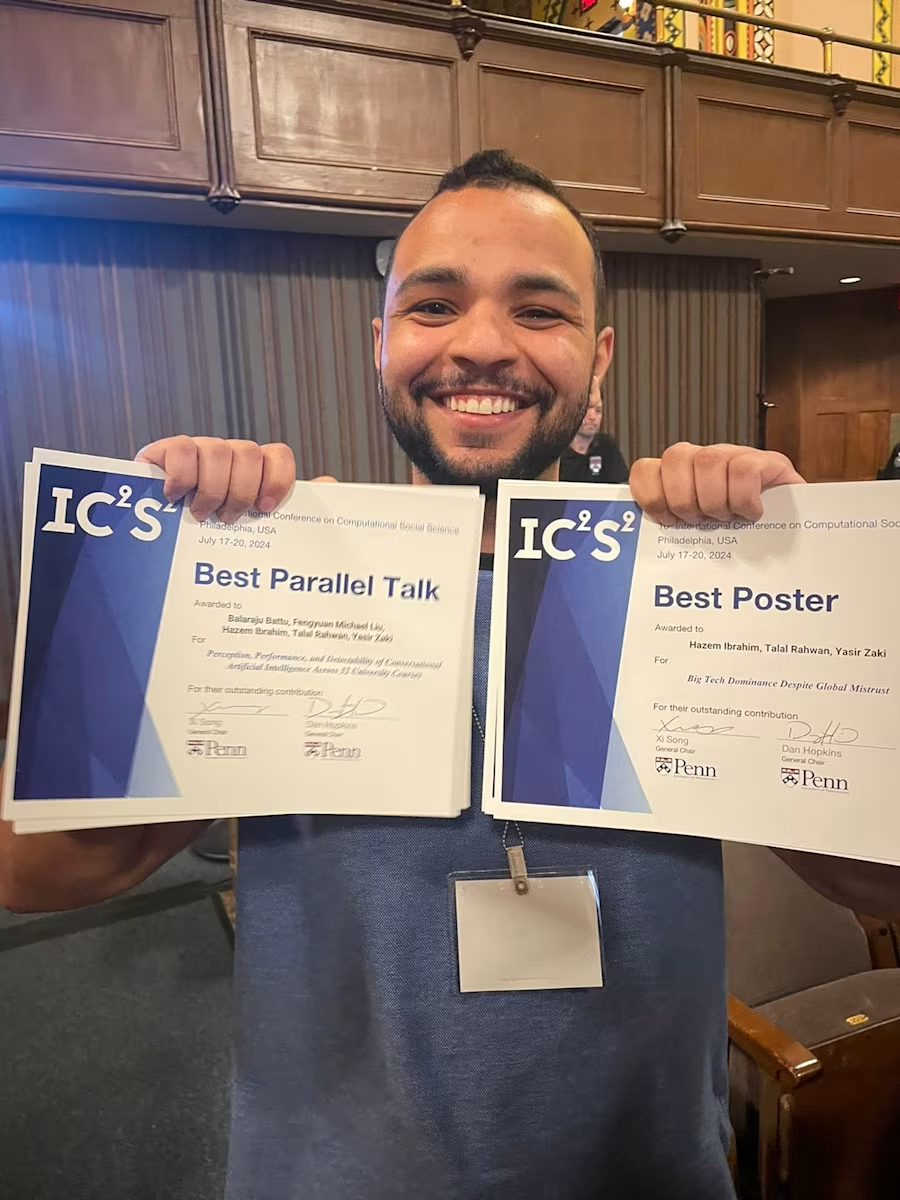

Awards @ IC2S2

Hazem Ibrahim, a PhD student working at our lab, won two prestigious awards at the International Conference on Computational Social Science (IC2S2). He won the best poster award for our "Big Tech Dominance Despite Global Mistrust" paper, and the best parallel talk award for our "Perception, performance, and detectability of conversational artificial intelligence across 32 university courses" paper.

Innovators Under 35 MENA 2023

MIT Technology Review Arabia announces winners of Innovators Under 35 MENA 2023

Hazem Ibrahim, a PhD student working at our lab, was named one of MIT Technology Review's 2023 Innovators Under 35. Hazem is working on building frameworks to analyze the impact of generative artificial intelligence on higher education. He has pioneered the study of ChatGPT detectors in the context of university assessments, as well as methods to obfuscate text to avoid detection. Throughout this process, he collaborated with government officials and experts in education studies to help guide educational policies on AI plagiarism and its impact in the classroom.

The dominance of "Big Tech" companies in global technological and online experiences raises concerns across governmental, economic, and ethical domains. Despite this, there's a lack of comprehensive studies on the impact of public scandals on these companies and global sentiment toward them. This study addresses this gap by analyzing Big Tech's power through acquisitions, market capitalization, and user numbers. It employs the synthetic control method to assess the effects of scandals on the stock prices of two major Big Tech firms, finding no lasting impact. Additionally, the study examines tweets mentioning these scandals, noting their quick disappearance from public attention. Surveying 5300 participants across 25 countries, the study reveals that individuals from countries with lower digital literacy and more authoritarian regimes tend to trust Big Tech more. It also uncovers concerns among respondents about data privacy, with many feeling a lack of control over their data and expressing worries about Big Tech's knowledge and potential surveillance. Moreover, a significant portion of participants feel addicted to Big Tech products and express a desire for more choice in the market. These findings underscore the negative effects of Big Tech's oligopolistic dominance on consumer choice and provide insights for policymakers seeking to address this issue.

Space: the Final Frontier

Paper @CoNext 2023: Dissecting the Performance of Satellite Network Operators

The rapid growth of satellite network operators (SNOs) has revolutionized broadband communications, enabling global connectivity and bridging the digital divide. As these networks expand, it is important to evaluate their performance and efficiency. This paper presents the first comprehensive study of SNOs. We take an opportunistic approach and devise a methodology that allows us to identify public network measurements performed via SNOs. We apply this methodology to both M-Lab and RIPE public datasets, which allows us to characterize the low-level performance and footprint of up to 18 SNOs operating in different orbits. Finally, we identify and recruit paid testers on three popular SNOs (Starlink, HughesNet, and ViaSat) to evaluate the performance of popular applications like web browsing and video streaming.

We study the performance of the two most popular location tags (Apple's AirTag and Samsung's SmartTag) through controlled experiments (with a known large distribution of location-reporting devices) as well as in-the-wild experiments (with no control over the number and kind of reporting devices encountered, thus emulating real-life use cases). We find that both tags achieve similar performance, e.g., they are located 60% of the time in about 10 minutes within a 100 meter radius. It follows that real-time stalking via location tags is impractical, even when both tags are deployed concurrently, which achieves comparable accuracy in half the time. Nevertheless, half of a victim's movements can be backtracked accurately (10 meter error) with just a one-hour delay.

Invited talk at the IETF-18 MAPRG.

YouTube's Political Recommendation

Paper @PNAS Nexus 2023: YouTube's recommendation algorithm is left-leaning in the United States

We analyze YouTube's recommendation algorithm by constructing archetypal users with varying political personas, and examining videos recommended during four stages of each user's life cycle: (i) after their account is created; (ii) as they build a political persona through watching videos of a particular political leaning; (iii) as they try to escape their political persona by watching videos of a different leaning; (iv) as they watch videos suggested by the recommendation algorithm. We find that while the algorithm pulls users away from political extremes, this pull is asymmetric, with users being pulled away from Far-Right content faster than from Far-Left. These findings raise questions on whether recommendation algorithms should exhibit political biases, and the societal implications that such biases could entail.

Mobile Networks Performance

Paper @TMA 2023: A WorldWide Look Into Mobile Access Networks Through The Eyes of Amigos

This paper proposes a novel testbed design called "AmiGo", which relies on travelers carrying mobile phones to act as vantage points and collect data on mobile network performance. The AmiGo design has three key advantages: it is easy to deploy, has realistic user mobility, and runs on real Android devices. We further developed a suite of measurement tools for AmiGo to perform network measurements, e.g., pings, speedtests, and webpage loads. We leverage these tools to measure the performance of 24 mobile networks across five continents over a month via an AmiGo deployment involving 31 students. We find that 50% of networks face a 40-70% chance of providing low data rates, only 20% achieve low latencies, and networks in Asia, Central/South America, and Africa have significantly higher CDN download times than in Europe. Most news websites load slowly, while YouTube performs well.

LLMs in Education

Paper @Scientific Reports 2023: Perception, performance, and detectability of conversational artificial intelligence across 32 university courses

Given the recent emergence of conversational artificial intelligence tools, educational institutions worldwide are facing the significant challenge of addressing the integration of artificial intelligence into educational frameworks. Yet, the literature lacks a systematic study evaluating the performance of such tools on university-level courses and their susceptibility to detection, and also lacks an examination of students' and educators' perspectives on the use of such tools in educational contexts. This work fills these gaps, providing timely and vital insights into the performance of the latest such tool—ChatGPT—and the threat of "AI-plagiarism" that it entails. Our findings can inform policy discussions of how to shape student evaluation frameworks in the age of artificial intelligence.

Homework in the Age of AI

Paper @IEEE Intelligent Systems 2023: Rethinking Homework in the Age of Artificial Intelligence

The evolution of natural language processing techniques has led to the development of advanced conversational tools such as ChatGPT, capable of assisting users with a variety of activities. Media attention has centered on ChatGPT's potential impact, policy implications, and ethical ramifications, particularly in the context of education. As such tools become more accessible, students across the globe may use them to assist with their homework. However, it is still unclear whether ChatGPT's performance is advanced enough to pose a serious risk of plagiarism. We fill this gap by evaluating ChatGPT on two introductory and two advanced university-level courses. We find that ChatGPT receives near-perfect grades on the majority of questions in the introductory courses but has not yet reached the level of sophistication required to pass in advanced courses. These findings suggest that, at least for some courses, current artificial intelligence tools pose a real threat that can no longer be overlooked by educational institutions.

Developing regions suffer from poor Internet connectivity and overreliance on low-end phones, which violates net neutrality, the idea that all Internet traffic should be treated equally. We sent participants to 56 countries to measure global variation in web-browsing experience, revealing significant inequality in mobile data cost and page load time. We also show that popular webpages are increasingly tailored to high-end phones, thereby exacerbating the inequality. Our solution, Lite-Web, makes webpages faster to load and easier to process on low-end phones. Evaluating Lite-Web on the ground reveals that it transforms the browsing experience of Pakistani villagers with low-end phones to that of Dubai residents with high-end phones. These findings call on researchers and policy makers to mitigate digital inequality.

Videoconferencing in the Wild

Paper @ACM IMC 2022: Performance characterization of videoconferencing in the wild

One important question that we tackle in this paper is: what is the performance of videoconferencing in the wild? Answering this generic question is challenging because it ideally requires a worldwide testbed composed of diverse devices (mobile, desktop), operating systems (Windows, macOS, Linux), and network accesses (mobile and WiFi). In this paper, we present such a testbed, which we developed to evaluate videoconferencing performance in the wild via automation for Android and Chromium-based browsers.

Read paper

Muzeel

Paper @ACM IMC 2022: Muzeel: assessing the impact of JavaScript dead code elimination on mobile web performance

Muzeel is a black-box approach that aims at removing dead code (i.e., unused code) from JavaScript files in today's web. It requires neither knowledge of the code nor execution traces. While state-of-the-art solutions stop analyzing JavaScript when the page loads, the core design principle of Muzeel is to address the challenge of dynamically analyzing JavaScript after the page is loaded, by emulating all possible user interactions with the page, such that the used functions (executed when interactivity events fire) are accurately identified, whereas unused functions are filtered out and eliminated.
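The difference between stopping at page load and continuing through emulated interactions can be illustrated with a toy sketch. This is not Muzeel's implementation: the function `split_used_and_dead`, its inputs, and the example function names are all hypothetical, and the sketch simply assumes we already know which functions ran at load time and during each emulated interaction.

```python
def split_used_and_dead(defined, ran_on_load, ran_per_interaction):
    """Split a page's functions into used and dead sets.

    defined: names of all functions shipped with the page (hypothetical input).
    ran_on_load: functions observed running while the page loads.
    ran_per_interaction: maps each emulated interaction to the functions it ran.
    """
    used = set(ran_on_load)
    for functions in ran_per_interaction.values():
        used |= set(functions)
    # Only count functions that the page actually defines.
    used &= set(defined)
    dead = set(defined) - used
    return used, dead


# Hypothetical page with four functions; one is only reachable through a click.
defined = {"init", "render", "openMenu", "legacyTracker"}
ran_on_load = {"init", "render"}
ran_per_interaction = {"click #menu": {"openMenu", "render"}}

# Stopping at page load would wrongly flag the click handler as dead.
_, dead_load_only = split_used_and_dead(defined, ran_on_load, {})
_, dead_with_events = split_used_and_dead(defined, ran_on_load, ran_per_interaction)
print(sorted(dead_load_only))
print(sorted(dead_with_events))
```

In this toy example, only `legacyTracker` is safe to eliminate once interactions are taken into account, whereas a load-time-only analysis would also remove `openMenu` and break the menu.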

Read paper

JSAnalyzer is an easy-to-use tool that enables web developers to quickly optimize JavaScript usage in their pages, and to generate simpler versions of these pages for mobile web users. JSAnalyzer is motivated by the widespread use of non-critical JavaScript elements, i.e., those that have negligible (if any) impact on the page’s visual content and interactive functionality. JSAnalyzer allows the developer to selectively enable or disable JavaScript elements in any given page while visually observing their impact on the page.

Read paper

slimWeb

Paper @ICTD 2022: To Block or Not to Block: Accelerating Mobile Web Pages On-The-Fly Through JS Classification

slimWeb is a novel approach that automatically derives lightweight versions of mobile web pages on-the-fly by eliminating the use of unnecessary JavaScript. It consists of a JavaScript classification service powered by a supervised Machine Learning (ML) model that provides insights into each JavaScript element embedded in a web page. slimWeb aims to improve the web browsing experience by predicting the class of each element, such that essential elements are preserved and non-essential elements are blocked.

Read paper

QLUE

Paper @The Webconf 2022: QLUE: A Computer Vision Tool for Uniform Qualitative Evaluation of Web Pages

QLUE (QuaLitative Uniform Evaluation) is a tool that uses computer vision to automate the qualitative evaluation of web pages generated by web complexity solutions with respect to their original versions. QLUE evaluates the content and the functionality of these pages separately using two metrics: QLUE's Structural Similarity to assess the former, and QLUE's Functional Similarity to assess the latter, a task that has proven challenging for humans given the complex functional dependencies in modern pages.

Read paper

ALCC

Paper @JSYS 2022: ALCC: Migrating Congestion Control to the Application Layer in Cellular Networks

Application Layer Congestion Control (ALCC) is a framework that allows any new CC protocol to be implemented easily at the application layer, within or above an application-layer protocol that sits atop a legacy TCP stack, and drives it to deliver approximately the same performance as its native implementation. The ALCC socket sits on top of a traditional TCP socket, yet it can leverage the large congestion windows opened by TCP connections to carefully execute an application-level CC within the window bounds of the underlying TCP connection.
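The idea of running an application-level window inside the bounds of the underlying TCP window can be sketched as follows. This is a simplified illustration rather than ALCC's actual logic; the function name `app_sendable_bytes` and its parameters are hypothetical, and all quantities are assumed to be in bytes.

```python
def app_sendable_bytes(app_cwnd, tcp_cwnd, bytes_in_flight):
    """Bytes the application may hand to the TCP socket right now.

    app_cwnd: window computed by the application-level CC protocol.
    tcp_cwnd: window currently opened by the underlying TCP connection.
    bytes_in_flight: bytes already sent but not yet acknowledged.
    """
    # The application-level window can never exceed what TCP allows.
    effective_window = min(app_cwnd, tcp_cwnd)
    # Never return a negative budget when the window shrinks below in-flight data.
    return max(0, effective_window - bytes_in_flight)


# TCP has opened a large window; the application-level protocol is the limiter.
budget = app_sendable_bytes(app_cwnd=30_000, tcp_cwnd=120_000, bytes_in_flight=12_000)
print(budget)
```

When the TCP window is much larger than the application-level window, as in this example, the application-level protocol effectively decides the sending rate.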

Read paper

MDI

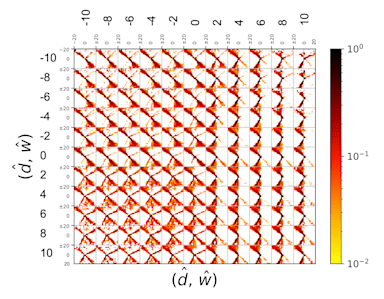

Paper @SIGCOMM CCR 2021: The Case for Model-Driven Interpretability of Delay-Based Congestion Control Protocols

Model-Driven Interpretability (MDI) is a congestion control framework that derives a model version of a delay-based protocol by simplifying the protocol's response into a guided random walk over a two-dimensional Markov model. We demonstrate the case for the MDI framework by using it to analyze and interpret the behavior of two delay-based protocols over cellular channels: Verus and Copa.

Read paper

A tool that enables the automation of the qualitative evaluation of web pages using computer vision. In comparison to humans, PQual can effectively evaluate all the functionality of a web page, whereas human users might skip many of the functional elements during the evaluation.

Read paper

JSCleaner

Paper @The Webconf 2020: JSCleaner: De-Cluttering Mobile Webpages Through JavaScript Cleanup

A JavaScript de-cluttering engine that aims at simplifying web pages without compromising the page content or functionality. JSCleaner uses a classification algorithm that classifies JavaScript into three main categories: non-critical, translatable, and critical scripts. JSCleaner removes the non-critical scripts from a web page, replaces the translatable scripts with their HTML outcomes, and preserves the critical scripts.
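The three-way handling of scripts described above can be sketched as a small dispatch routine. This is an illustrative sketch only, not JSCleaner's code: the `Script` type, the `clean_page` function, and the example URLs are hypothetical, and the labels are assumed to come from a classifier that is not modeled here. Keeping a translatable script when no HTML outcome is available is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Script:
    src: str                            # script URL (hypothetical example data)
    label: str                          # "non-critical", "translatable", or "critical"
    html_outcome: Optional[str] = None  # static HTML the script would produce


def clean_page(scripts: List[Script]) -> List[str]:
    """Return the markup fragments that remain after de-cluttering."""
    kept = []
    for script in scripts:
        tag = f'<script src="{script.src}"></script>'
        if script.label == "non-critical":
            continue  # removed from the page
        if script.label == "translatable":
            # Replace the script with its HTML outcome; if none is available,
            # keep the script (a conservative choice made for this sketch).
            kept.append(script.html_outcome if script.html_outcome else tag)
        elif script.label == "critical":
            kept.append(tag)  # preserved unchanged
        else:
            raise ValueError(f"unknown label: {script.label}")
    return kept


page = [
    Script("https://example.com/ads.js", "non-critical"),
    Script("https://example.com/menu.js", "translatable", "<ul><li>Home</li></ul>"),
    Script("https://example.com/checkout.js", "critical"),
]
print(clean_page(page))
```

The ad script is dropped, the menu script is replaced by its static HTML, and the checkout script is left in place.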

Read paper

An adaptive, delay-based congestion control protocol designed for cellular networks. Verus leverages the relationship between the sending window and the observed network delay by using the delay profile curve.

Read paper

Be part of the team

We are always on the lookout for talented people to join the lab, whether for a summer research internship, a research visit, or a longer-term position as a postdoc or research assistant. For inquiries, please email yasir.zaki (at) nyu.edu.

News

- 01.09.2022: Hazem Ibrahim has joined our lab as a research assistant. Welcome on board, Hazem.
- 23.08.2022: Our paper "Assessing the Impact of JavaScript Dead Code Elimination on Mobile Web Performance" has been accepted at the ACM Internet Measurement Conference (IMC) 2022 ...
- 23.08.2022: Our paper "Performance Characterization of Videoconferencing in the Wild" has been accepted at the ACM Internet Measurement Conference (IMC) 2022 ...
- 05.08.2019: Our "Learning Congestion State For mmWave Channels" paper has been accepted at the ACM mmNets 2019 conference...
- 22.12.2018: Dr. Moumena Shaqfa has joined our lab as a postdoctoral associate. Welcome on board.
- 30.06.2017: Our "Xcache: Rethinking Edge Caching" paper has been accepted at the Information and Communication Technologies for Development (ICTD) 2017 conference ...